How to Tokenize Japanese in Python

Over the past several years there's been a welcome trend in NLP projects to be broadly multilingual. However, even when many languages are supported, there are a few that tend to be left out. One of these is Japanese. Japanese is written without spaces, and deciding where one word ends and another begins is not trivial. While highly accurate tokenizers are available, they can be hard to use, and English documentation is scarce. This is a short guide to tokenizing Japanese in Python that should be enough to get you started adding Japanese support to your application.

Photo by Alexandre .L on Unsplash

Note: An expanded version of this article was published at EMNLP 2020; you can find the PDF here.

Getting Ready

First, you'll need to install a tokenizer and a dictionary. For this tutorial we'll use fugashi with unidic-lite, both projects I maintain. You can install them like this:

pip install "fugashi[unidic-lite]"

Fugashi comes with a script so you can test it out at the command line. Type in some Japanese and the output will have one word per line, along with other information like part of speech.

> fugashi
麩菓子は、麩を主材料とした日本の菓子。
麩 フ フ 麩 名詞-普通名詞-一般
菓子 カシ カシ 菓子 名詞-普通名詞-一般
は ワ ハ は 助詞-係助詞
、 、 補助記号-読点
麩 フ フ 麩 名詞-普通名詞-一般
を オ ヲ を 助詞-格助詞
主材 シュザイ シュザイ 主材 名詞-普通名詞-一般
料 リョー リョウ 料 接尾辞-名詞的-一般
と ト ト と 助詞-格助詞
し シ スル 為る 動詞-非自立可能 サ行変格 連用形-一般
た タ タ た 助動詞 助動詞-タ 連体形-一般
日本 ニッポン ニッポン 日本 名詞-固有名詞-地名-国
の ノ ノ の 助詞-格助詞
菓子 カシ カシ 菓子 名詞-普通名詞-一般
。 。 補助記号-句点
EOS

The EOS stands for "end of sentence", though fugashi is not actually performing sentence tokenization; in this case it just marks the end of the input.

Sample Code

Now we're ready to get started with converting plain Japanese text into a list of words in Python.

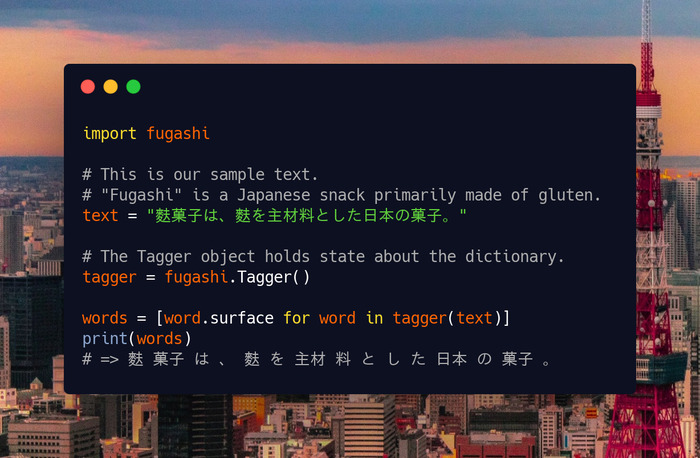

import fugashi

# This is our sample text.
# "Fugashi" is a Japanese snack primarily made of gluten.
text = "麩菓子は、麩を主材料とした日本の菓子。"

# The Tagger object holds state about the dictionary.
tagger = fugashi.Tagger()

words = [word.surface for word in tagger(text)]
print(*words)
# => 麩 菓子 は 、 麩 を 主材 料 と し た 日本 の 菓子 。

This prints the original sentence with spaces inserted between words. In many cases, that's all you need, but fugashi provides a lot of other information, such as part of speech, lemmas, broad etymological category, pronunciation, and more. This information all comes from UniDic, a dictionary provided by the National Institute for Japanese Language and Linguistics (NINJAL).
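As a rough sketch of reading those extra fields, the helper below pulls the top-level part of speech, lemma, pronunciation, and etymological category from each token. The names `token_row` and `analyze` are made up for this illustration, and the field names (`pos1`, `lemma`, `pron`, `goshu`) assume a UniDic dictionary such as unidic-lite; other dictionaries expose different fields.

```python
def token_row(word):
    """Return (surface, pos1, lemma, pron, goshu) for one token.

    `word` is assumed to behave like a fugashi node backed by UniDic:
    it has a `.surface` string and a `.feature` named tuple.
    """
    f = word.feature
    return (word.surface, f.pos1, f.lemma, f.pron, f.goshu)


def analyze(text, tagger):
    """Tokenize `text` with `tagger` and return one row per token."""
    return [token_row(word) for word in tagger(text)]


# Assuming fugashi and unidic-lite are installed, usage would look like:
#
#   import fugashi
#   tagger = fugashi.Tagger()
#   for row in analyze("麩菓子は、麩を主材料とした日本の菓子。", tagger):
#       print(*row, sep="\t")
```

Passing the tagger in as an argument keeps the helper independent of how the tagger was created, so a single tagger can be shared across calls.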

fugashi is a wrapper for MeCab, a C++ Japanese tokenizer. MeCab is doing all the hard work here, but fugashi wraps it to make it more Pythonic, easier to install, and to clarify some common error cases.

You may wonder why part of speech and other information is included by default. In the classical NLP pipeline for languages like English, tokenization is a separate step before part of speech tagging. In Japanese, however, knowing part of speech is important in getting tokenization right, so they're conventionally solved as a joint task. This is why Japanese tokenizers are often referred to as "morphological analyzers" (形態素解析器).

Notes on Japanese Tokenization

There are several things about Japanese tokenization that may be surprising if you're used to languages like English.

Lemmas May Not Resemble the Words in the Text at All

Here's how you get lemma information with fugashi:

import fugashi

tagger = fugashi.Tagger()

text = "麩を用いた菓子は江戸時代からすでに存在していた。"
print("input:", text)

for word in tagger(text):
    # feature is a named tuple holding all the UniDic info
    print(word.surface, word.feature.lemma, sep="\t")

And here's the output of the script:

input: 麩を用いた菓子は江戸時代からすでに存在していた。
麩 麩
を を
用い 用いる
た た
菓子 菓子
は は
江戸 エド
時代 時代
から から
すでに 既に
存在 存在
し 為る
て て
い 居る
た た
。 。

You can see that 用い has 用いる as a lemma, and that し has 為る and い has 居る, handling both inflection and orthographic variation. すでに is not inflected, but the lemma uses the kanji form 既に.

These lemmas come from UniDic, which by convention uses the "dictionary form" of a word for lemmas. This is typically in kanji even if the word isn't usually written in kanji because the kanji form is considered less ambiguous. For example, この ("this [thing]") has 此の as a lemma, even though normal modern writing would never use that form. This is also true of 為る in the above example.

This can be surprising if you aren't familiar with Japanese, but it's not a problem. It is worth keeping in mind if your application ever shows lemmas to your users for any reason, though, as the lemmas may not be in a form they expect.

Another thing to keep in mind is that most lemmas in Japanese deal with orthographic rather than inflectional variation. This orthographic variation is called "hyoukiyure" (表記揺れ) and causes problems similar to spelling errors in English.
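One place this matters in practice is counting words: keying on the lemma instead of the surface form folds spelling variants together. Here is a minimal sketch of that idea. The function name `count_by_lemma` is hypothetical, and the sample pairs are written by hand for illustration rather than produced by running a tokenizer.

```python
from collections import Counter


def count_by_lemma(pairs):
    """Count tokens by lemma so orthographic variants share one key.

    `pairs` is an iterable of (surface, lemma) tuples.
    """
    return Counter(lemma for _surface, lemma in pairs)


# Hand-written sample: the kana and kanji spellings share the lemma 既に.
# With fugashi, pairs could be built as:
#   [(w.surface, w.feature.lemma) for w in tagger(text)]
pairs = [("すでに", "既に"), ("既に", "既に"), ("菓子", "菓子")]

counts = count_by_lemma(pairs)
print(counts["既に"])  # 2
print(counts["菓子"])  # 1
```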

Verbs Will Often Be Multiple Tokens

Any inflection of a verb will result in multiple tokens. This can also affect adjectives that inflect, like 赤い. You can see this in the verbs at the end of the previous example, or in this more compact example:

input: 見た ("looked" or "saw")
output:
見 ミ ミル 見る 動詞-非自立可能 上一段-マ行 連用形-一般
た タ タ た 助動詞 助動詞-タ 終止形-一般

This is as if "looked" were tokenized into "look" and "ed" in English. This feels strange even to native Japanese speakers, but it's common to all modern tokenizers. The main reason for this is that verb inflections are extremely regular, so registering verb stems and verb parts separately in the dictionary makes dictionary maintenance easier and the tokenizer implementation simpler and faster. It also works better in the rare case an unknown verb shows up. (Verbs are a closed class in Japanese, which means new verbs aren't common.)

In the early 90s several tokenizers handled verb morphology directly, but that approach has been abandoned over time because of the above advantages of the fine-grained approach. Depending on your application needs you can use some simple rules to lump verb parts together or just discard non-stem parts as stop words.
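Both options can be sketched in a few lines. The rules below are deliberately simple and are only an assumption about what "simple rules" might look like: auxiliaries (助動詞) are glued onto a preceding verb or adjective, and the stop-word filter drops particles, auxiliaries, and punctuation. The function names are hypothetical, and the input is a list of (surface, top-level part of speech) pairs so the logic can be read without a tokenizer installed.

```python
INFLECTING = {"動詞", "形容詞"}  # verbs and inflecting adjectives
AUXILIARY = "助動詞"             # auxiliaries such as た


def lump_inflections(tokens):
    """Attach auxiliaries to the verb or adjective chunk before them."""
    chunks = []
    head_pos = None  # part of speech of the token that opened the last chunk
    for surface, pos1 in tokens:
        if pos1 == AUXILIARY and chunks and head_pos in INFLECTING:
            chunks[-1] += surface
        else:
            chunks.append(surface)
            head_pos = pos1
    return chunks


def drop_function_words(tokens, stop_pos=frozenset({"助詞", "助動詞", "補助記号"})):
    """Keep only tokens whose part of speech is not in `stop_pos`."""
    return [surface for surface, pos1 in tokens if pos1 not in stop_pos]


# With fugashi and UniDic, tokens could be built as:
#   [(w.surface, w.feature.pos1) for w in tagger(text)]
tokens = [("見", "動詞"), ("た", "助動詞"), ("。", "補助記号")]

print(lump_inflections(tokens))     # ['見た', '。']
print(drop_function_words(tokens))  # ['見']
```

A real application would likely need more rules, for example for particles like て that sit between verb parts, which this sketch leaves as separate tokens.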

The Tagger Object Has a Startup Cost

It's fast enough that you won't notice for one invocation, but creating the Tagger is a lot of work for the computer. When processing text in a loop it's important you re-use the Tagger rather than creating a new Tagger for each input.

# Don't do this
for text in texts:
    tagger = fugashi.Tagger()
    words = tagger(text)

# Do this instead
tagger = fugashi.Tagger()
for text in texts:
    words = tagger(text)

If you follow the second pattern MeCab shouldn't be a speed bottleneck for normal applications.
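When the tokenizer is called from many places in a larger program, one way to get the same effect is to hide the Tagger behind a cached accessor. This is only a sketch of that pattern: `get_tagger` and `tokenize` are hypothetical helper names, and the code assumes fugashi and a dictionary are installed by the time `get_tagger` is first called.

```python
from functools import lru_cache


@lru_cache(maxsize=1)
def get_tagger():
    """Create the Tagger on first use and return the same object afterwards."""
    # Imported here so the startup cost is paid only when tokenizing is needed.
    import fugashi
    return fugashi.Tagger()


def tokenize(text):
    """Return the surface forms of the tokens in `text`."""
    return [word.surface for word in get_tagger()(text)]
```

Every call to `tokenize` then shares one Tagger, no matter which module it is called from.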

Always Note Your Tokenizer Details

If you publish a resource using tokenized Japanese text, always be careful to mention what tokenizer and what dictionary you used so your results can be replicated. Saying you used MeCab isn't enough information to reproduce your results, because there are many different dictionaries for MeCab that can give completely different results. Even if you specify the dictionary, it's critical that you specify the version too, since popular dictionaries like UniDic may be updated over time. If you want to know more you can read my article about Japanese tokenizer dictionaries.
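A small sketch of how those details could be captured automatically alongside your results: the function name `tokenizer_versions` is hypothetical, and the package names assume the fugashi and unidic-lite setup from this tutorial. Note that this records the installed package versions, so if the dictionary package wraps a specific UniDic release you may want to note that separately.

```python
from importlib import metadata


def tokenizer_versions(packages=("fugashi", "unidic-lite")):
    """Map each package name to its installed version, or None if absent."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


# Print the versions so they can be saved next to the tokenized output.
print(tokenizer_versions())
```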

Hopefully that's enough to get you started with tokenizing Japanese. If you have trouble, feel free to file an issue or contact me. I'm glad to help out with open source projects as time allows, and for commercial projects you can hire me to handle the integration directly. Ψ